Bending time in experiments

Experiments in Eppo take up to 65% fewer days to reach statistical significance, via an econometrics method that has gone mainstream

I wish experiments wouldn’t take so long.

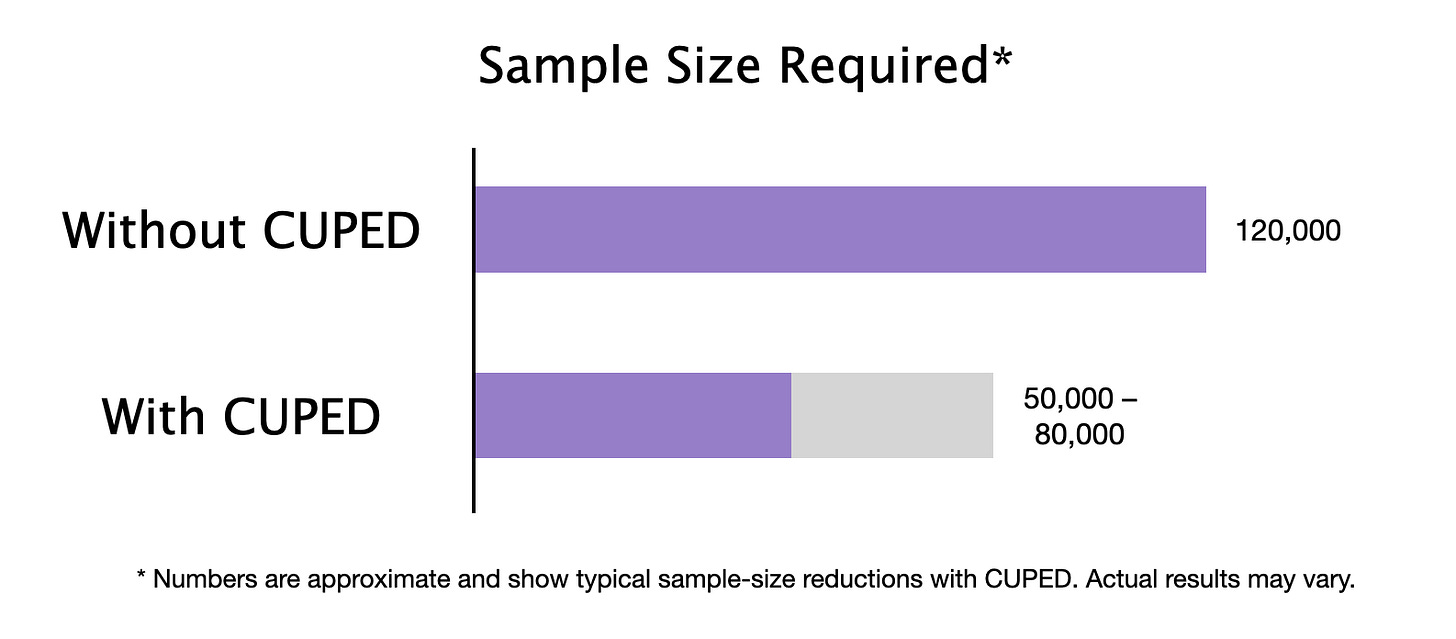

Eppo customers can now conclude experiments in up to 65% less time than before. This improvement in experiment run-time is possible thanks to CUPED variance reduction, a statistical technique that is mainstream at FAANG companies and decacorns but has never before been available on the commercial market. Airbnb uses this method for all of its experiments, and companies like DoorDash, Booking, and Netflix have adopted it as well. Eppo is the first commercial experimentation tool to offer CUPED variance reduction.

When we first started Eppo, we spoke to 15 product managers at companies across the experiment maturity scale. The most common complaint was that experiments took too long to run. Unless you work at a company with FAANG-scale user traffic, an experiment with standard statistical parameters likely takes over a month to run.

This is a huge pain: it forces teams to choose between excruciatingly long experiment run-times and a sky-high false-negative rate. I personally felt this pain while running experiments at Webflow, where activation experiments would take 4 months to converge.

But what if you had a magic wand that would make experiments run faster? With no tradeoffs at all?

Experiment speed is about having enough data

Many tools on the market advertise faster experimentation. But what exactly is “fast experimentation”?

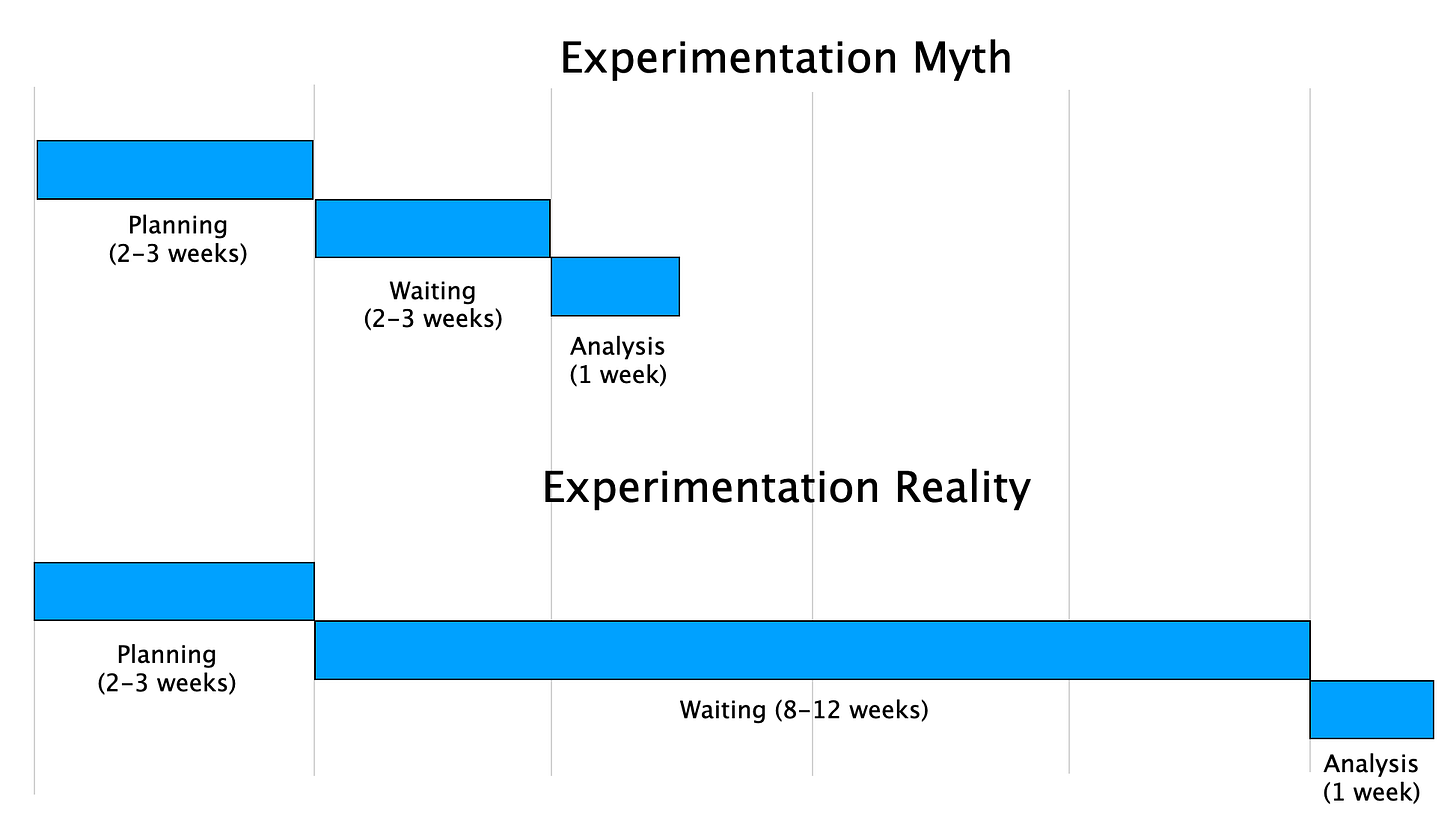

Experiments consist of three distinct phases: planning and development work (including sample-size calculations and user randomization), collecting data, and analysis (including investigation, documentation, and decision-making). No matter how much you speed up the initial set-up and that final analysis, the bulk of experiment time at most companies is dedicated to waiting to reach an appropriate sample size.

Many start-ups find that an experimentation program is simply infeasible when presented with the realities of statistical power analysis. Even mid-sized companies may find that investments into feature flagging and analytics aren’t enabling the true experimentation velocity that they were hoping for. They’re all dressed up and waiting for the sample-size taxi to arrive.
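To make the "sample-size taxi" concrete, here is a minimal sketch of a textbook power calculation for a conversion-rate experiment. The baseline rate, lift, and traffic numbers are hypothetical, the function names are made up for this illustration, and the formula is the standard normal approximation for comparing two proportions, not necessarily what any particular tool uses.

```python
from math import ceil
from statistics import NormalDist


def users_per_group(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate users needed per group for a two-sided test on a conversion rate."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the false-positive rate
    z_beta = NormalDist().inv_cdf(power)           # critical value for the desired power
    variance = p1 * (1 - p1) + p2 * (1 - p2)       # sum of the two Bernoulli variances
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_to_run(n_per_group, daily_users, groups=2):
    """Days of traffic needed if every daily user enters the experiment."""
    return ceil(groups * n_per_group / daily_users)


# Hypothetical scenario: 10% baseline conversion, hoping to detect a 5% relative lift,
# with 2,000 new users entering the experiment per day.
n = users_per_group(baseline_rate=0.10, relative_lift=0.05)
print(f"users per group: {n}, days to run: {days_to_run(n, daily_users=2_000)}")
```

Under these assumed numbers the experiment needs well over a month of traffic, which is the situation most teams without FAANG-scale traffic find themselves in.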

What’s the cost of waiting for experiments to finish? In business terms, a long-running experiment is the same as a long-delayed decision. Organizations that can learn from experiments and react quickly enjoy a tactical advantage over their competitors. Besides being a decisional dead weight, long-running experiments have other technical and cultural ramifications: think of the technical debt incurred by keeping two code paths running for several weeks or months. Think about whether anyone at the company will be excited to run an experiment if the result arrives slower than a container ship crossing the Pacific Ocean. Think of all the small wins and little ideas that will go unmeasured and unimplemented because experiments are just too slow.

The scarcest resource in experimentation today isn't tooling or even technical talent. The scarcest resource is time.

Experimentation speed is about creating a feedback loop so that good ideas lead to even better ideas and misguided ideas lead to rapid learning. The faster experiments finish, the tighter that feedback loop gets, creating a compound interest effect on good ideas.

Microsoft first started “bending time” in experimentation

It can feel like A/B experimentation culture has been around forever, but the tech industry’s pervasive, platformed version has only existed since the 2010s, growing up alongside internet commerce.

In 2013, a team from Microsoft led by Alex Deng published the paper “Improving the Sensitivity of Online Controlled Experiments by Utilizing Pre-Experiment Data”. It introduced a new method, “Controlled-experiment Using Pre-Experiment Data” or CUPED for short, that could speed up experiments with no tradeoff required. With this method, Microsoft could bend time: experiments that typically took 8 weeks needed only 5 to 6 weeks. Since that paper, the method has gone mainstream.

Suppose that you are McDonald’s and want to run an experiment to see if you can increase the number of Happy Meals sold by including a menu in Spanish.

A standard approach would be to divide locations into two groups and assign the Spanish menu to one group. The final analysis would compare which group of stores sells more Happy Meals. This is a normal experiment; it will measure impact accurately but takes a long time.

A slightly more sophisticated approach would use the same two groups of locations but change the analysis: instead of comparing raw sales, it would compare each group’s year-over-year change in sales. Mathematically, this takes less time because it controls for natural variation between stores. This is known as a difference-in-differences experiment.

A full CUPED approach doesn’t just use the previous year’s sales as a control; it uses all known pre-experiment information. For example, controlling for the following factors might speed up the experiment even more:

1. The number of families (who are more likely to order Happy Meals) that go to each store.
2. The time of day when customers visit each location, since morning diners are more likely to order breakfast than a Happy Meal.
3. Whether a store was part of a limited launch of the McRib, which might draw attention away from Happy Meals.

Each successive method reduces time-to-significance by reducing noise in the experiment data. Just like noise-canceling headphones, CUPED can take out ambient effects to help the experimenter detect impact more clearly.
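The noise-canceling idea can be sketched in a few lines of Python. This is a minimal illustration of the single-covariate CUPED adjustment on simulated data, not Eppo's implementation: the data-generating numbers, the variable names, and the helper functions `cuped_adjust` and `diff_and_se` are all assumptions made up for this example.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
n = 20_000

# Simulated (hypothetical) data: each unit's pre-experiment metric predicts
# its in-experiment metric, and treatment adds a small true lift on top.
pre = rng.gamma(shape=2.0, scale=10.0, size=n)
treated = rng.integers(0, 2, size=n).astype(bool)
true_lift = 0.5
post = 0.8 * pre + rng.normal(0.0, 8.0, size=n) + true_lift * treated


def cuped_adjust(metric, covariate):
    """Subtract the part of the metric that the pre-experiment covariate explains."""
    theta = np.cov(metric, covariate)[0, 1] / np.var(covariate, ddof=1)
    return metric - theta * (covariate - covariate.mean())


def diff_and_se(metric, is_treated):
    """Difference in group means and its standard error."""
    a, b = metric[is_treated], metric[~is_treated]
    diff = a.mean() - b.mean()
    se = np.sqrt(a.var(ddof=1) / a.size + b.var(ddof=1) / b.size)
    return diff, se


raw_diff, raw_se = diff_and_se(post, treated)
cuped_diff, cuped_se = diff_and_se(cuped_adjust(post, pre), treated)

print(f"raw:   lift={raw_diff:.3f}  se={raw_se:.3f}")
print(f"cuped: lift={cuped_diff:.3f}  se={cuped_se:.3f}")
```

Because the covariate is measured before the experiment starts, it is unaffected by the treatment, so the adjustment leaves the estimated lift unbiased while shrinking its standard error. How much it shrinks depends entirely on how well the pre-experiment data predicts the metric.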

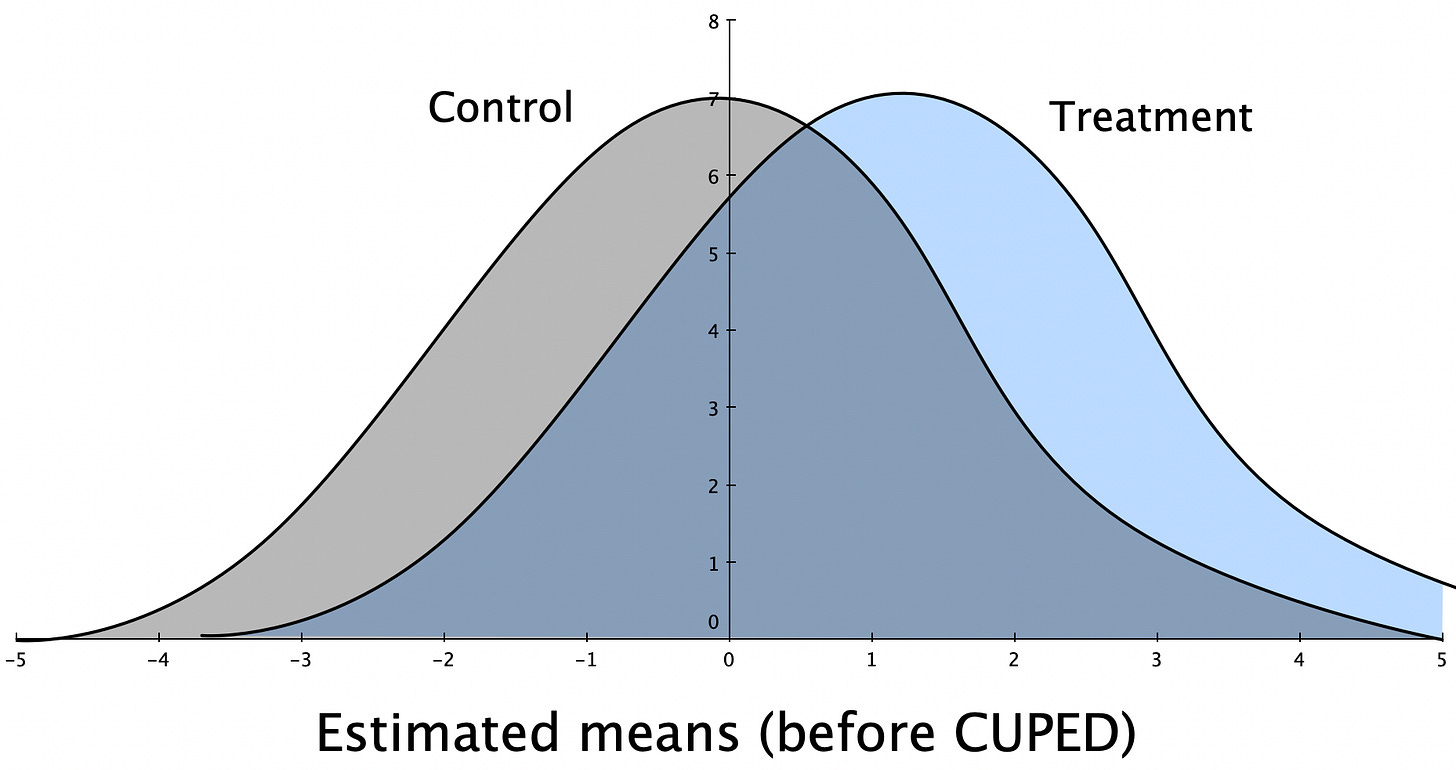

In visual terms, suppose that the treatment-versus-control experiment data looked something like this before applying CUPED:

After applying CUPED, the uncertainty around the averages decreases, like this:

The net effect of sharpening these measurements is that it takes less time to figure out whether an experiment is having a positive impact or not. CUPED is bending time with math – alleviating the largest pain point in experimentation today.
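As a rough back-of-the-envelope sketch of how sharper measurements translate into run-time: with a single pre-experiment covariate, CUPED scales the metric's variance by one minus the squared correlation between the covariate and the metric, and the required sample size scales with variance. The function below is hypothetical and assumes constant daily traffic, so that run-time is proportional to sample size.

```python
def weeks_with_cuped(baseline_weeks, correlation):
    """Estimated run-time after CUPED, assuming run-time scales with metric variance."""
    if not -1.0 < correlation < 1.0:
        raise ValueError("correlation must be strictly between -1 and 1")
    return baseline_weeks * (1.0 - correlation ** 2)


# Hypothetical correlations between the pre-experiment covariate and the metric.
for rho in (0.5, 0.6, 0.8):
    print(f"correlation {rho}: 8 weeks becomes {weeks_with_cuped(8, rho):.1f} weeks")
```

Under these assumptions, going from 8 weeks to 5 or 6 weeks corresponds to a correlation of roughly 0.5 to 0.6, and a 65% reduction would need a correlation of about 0.8. Metrics with little pre-experiment signal, such as those for brand-new users, would see much smaller gains.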

Eppo lets you have world-class experimentation infrastructure

Airbnb, Booking, Uber, and Facebook: besides large market capitalizations, what do these companies have in common?

They invested heavily into experimentation infrastructure early in their growth trajectories.

They used this experimentation infrastructure to out-maneuver competition by rapidly learning from customers.

Same results, twice as fast.

Today, experimentation infrastructure is table stakes for any ambitious, product-led growth company. And yet, there is no way to buy Airbnb’s experimentation tools. All you can buy are feature flagging tools masquerading as experimentation platforms, none of which give results that a data or finance team would trust.

Eppo is here to bridge the gap between very large tech companies and everyone else. Our experimentation platform lets you drive revenue and impact instead of clicks and engagements. It uses the same data warehouse sources as BI tools like Looker and Tableau, is architected privacy-first so you don’t have to share any sensitive customer data outside of your cloud, and is powered by world-class statistical methods like CUPED.